Sensor Data Collection: Building Scalable Multimodal Robotics Data

Defined.ai collected 225 hours of custom synchronized robotics sensor data for a leading AI research and deployment company.

TL;DR

A global AI innovator investing in robotics AI needed high-quality sensor data that captured natural human behavior: hands, body motion, gaze, depth and grip force. To de-risk scale-up, Defined.ai designed a three-tier workflow with three parallel data-collection kits that produced 225 hours of finished, synchronized recordings (75 hours per kit). We also delivered repeatable calibration and collection guidelines, along with weekly delivery reports.

Along the way, we built a sensor-data pipeline optimized for temporal synchronization, data annotation consistency and production scalability. We used industry-standard logging approaches for multimodal robotics datasets to store timestamped, multi-channel sensor streams efficiently.
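The case study doesn't describe the pipeline internals. As a minimal sketch, assuming each sensor channel is stored as a list of messages already sorted by a nanosecond capture timestamp on a shared clock (the `Message` type, `merge_channels` helper and channel names are all hypothetical), a reader can replay every channel in one time order with a k-way merge:

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Message:
    log_time_ns: int  # capture timestamp on a shared clock, in nanoseconds
    channel: str      # hypothetical topic name, e.g. "/gaze"
    payload: dict = field(default_factory=dict)


def merge_channels(channels: Dict[str, List[Message]]) -> List[Message]:
    """K-way merge of per-channel lists (each already sorted by log_time_ns)
    into one time-ordered stream. Ties keep the channel insertion order."""
    return list(heapq.merge(*channels.values(), key=lambda m: m.log_time_ns))


def make_stream(channel: str, period_ns: int, count: int) -> List[Message]:
    """Build a synthetic, evenly sampled stream for illustration only."""
    return [Message(i * period_ns, channel, {"seq": i}) for i in range(count)]


channels = {
    "/gaze": make_stream("/gaze", 5_000_000, 10),              # ~200 Hz
    "/mocap/body": make_stream("/mocap/body", 16_666_667, 3),  # ~60 Hz
    "/video": make_stream("/video", 33_333_333, 2),            # ~30 Hz
}
merged = merge_channels(channels)
```

Because each channel is already sorted, the merge costs O(n log k) for n messages across k channels and never needs the whole recording sorted in memory at once.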

Our customer: a leading AI research and deployment company building its robotics division

The client is already a major player in AI but, given the recent trends toward consumer robotics and autonomous systems, wants to develop next-generation manipulation capabilities for home environments. Think household assistance scenarios that require accurate perception and precise control across varied lighting, rooms, objects and operator styles.

The challenge: turning messy real-world behavior into training-ready sensor data

Training and evaluating modern humanoid robots and manipulation systems increasingly depends on multimodal sensor data that reflects real-world movement rather than slowed-down or staged demonstrations. But capturing that behavior faithfully in ordinary household settings creates several challenges:

- Multimodal alignment: gaze, point-of-view (POV) video, depth, hand pose, body kinematics and force must be synchronized tightly enough for learning and replay.

- Consistency at scale: multiple operators and environments can quickly introduce “dataset drift” unless calibration and collection procedures are standardized.

- Diversity requirements: kitchens, living rooms, bathrooms and garages, under varied lighting and with different people and objects, all need to be represented with limited duplication.

- Machine-usable annotation: time-stamped task descriptions, frame/episode timestamps, global UTC time and location metadata all need to be coherent across every stream.

- Clip and task constraints: recordings had to include both short task clips (30 seconds to 5 minutes) and long task clips (up to 30 minutes), with at most 5 hours per exact combination of task type and environment, and had to capture natural human hand motion (no intentional slowdown or speedup).
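The duration limits in the last bullet are easy to check automatically before delivery. Here is a minimal sketch, assuming each clip carries a task type, environment label, duration and short/long kind (the `Clip` type, `validate_clips` helper and labels are hypothetical; the source gives no minimum for long clips, so only their 30-minute maximum is checked, and natural hand motion still needs human review):

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import List

SHORT_MIN_S = 30               # short clips: 30 seconds ...
SHORT_MAX_S = 5 * 60           # ... to 5 minutes
LONG_MAX_S = 30 * 60           # long clips: up to 30 minutes
CAP_PER_TASK_ENV_S = 5 * 3600  # at most 5 hours per (task type, environment)


@dataclass
class Clip:
    clip_id: str
    task_type: str    # hypothetical label, e.g. "load_dishwasher"
    environment: str  # hypothetical label, e.g. "kitchen_A"
    duration_s: float
    kind: str         # "short" or "long"


def validate_clips(clips: List[Clip]) -> List[str]:
    """Return human-readable constraint violations; an empty list means all pass."""
    problems = []
    totals = defaultdict(float)  # seconds recorded per (task_type, environment)
    for c in clips:
        if c.kind not in ("short", "long"):
            problems.append(f"{c.clip_id}: unknown clip kind {c.kind!r}")
        elif c.kind == "short" and not (SHORT_MIN_S <= c.duration_s <= SHORT_MAX_S):
            problems.append(f"{c.clip_id}: short clip of {c.duration_s:.0f} s is outside 30 s to 5 min")
        elif c.kind == "long" and c.duration_s > LONG_MAX_S:
            problems.append(f"{c.clip_id}: long clip of {c.duration_s:.0f} s exceeds 30 min")
        totals[(c.task_type, c.environment)] += c.duration_s
    for (task, env), total in totals.items():
        if total > CAP_PER_TASK_ENV_S:
            problems.append(f"{task} / {env}: {total / 3600:.2f} h exceeds the 5 h cap")
    return problems


clips = [
    Clip("c1", "wipe_counter", "kitchen_A", 20, "short"),     # too short
    Clip("c2", "wipe_counter", "kitchen_A", 31 * 60, "long"),  # too long
]
# Eleven 30-minute clips of one task in one room: 5.5 h, over the cap.
clips += [Clip(f"l{i}", "load_dishwasher", "kitchen_A", 30 * 60, "long") for i in range(11)]
problems = validate_clips(clips)
```

Keying the running total on the (task type, environment) pair mirrors the "per exact task type and environment" wording, so the same task recorded in a second room gets its own 5-hour budget.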

In short, the client didn’t just need more data: they needed production-grade sensor data with repeatable procedures and documentation that could be scaled into ongoing collection.

Our capabilities: sensor data collection for robotics AI and autonomous systems

To meet the bar for robotics-grade data collection, we brought a flexible, field-ready capability stack:

1. Multimodal wearable + camera integration

- Smart glasses can capture egocentric scene video and high-rate gaze signals and, depending on configuration, an inertial measurement unit (IMU) stream; some commercial systems output gaze at up to ~200 Hz and scene video at ~30 Hz.

- Research-grade wearable eye trackers commonly support 50–100 Hz sampling and are built specifically for real-world behavior capture.

2. Body + hand motion capture workflows

- Inertial motion capture systems typically stream at rates around 60 Hz; operational factors such as wireless range and latency depend on the setup.

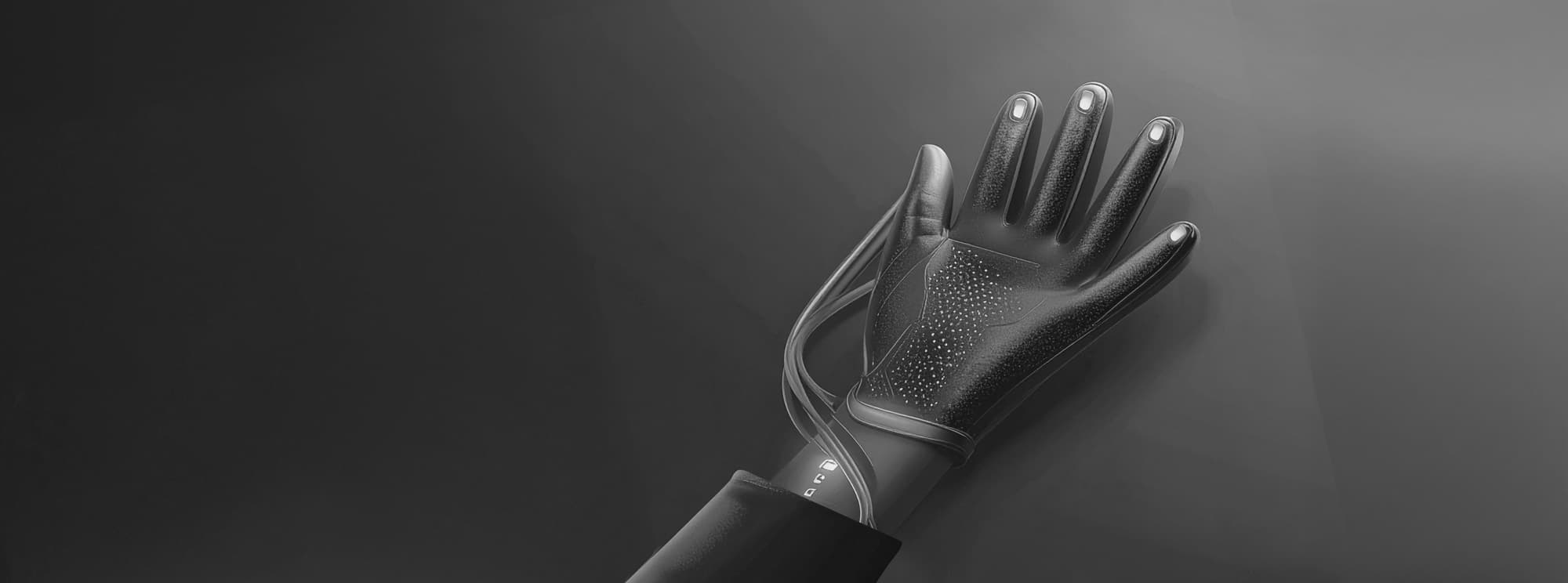

3. Force/pressure capture for grip and contact

- Wearable pressure/force sensing gloves can embed dozens of tactile sensors and detect very low pressures, supporting natural manipulation measurements.

4. Robotics-friendly logging and packaging

- MCAP is an open-source container format designed for multimodal log data with multiple timestamped channels. It is append-only, supports optional indexing and compression, and is widely used in robotics pipelines.
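Streams captured at different rates (for example gaze at up to ~200 Hz, scene video at ~30 Hz and body motion capture at ~60 Hz, as listed above) have to be matched sample by sample before training. The following is a minimal sketch of one common approach, nearest-timestamp matching against a reference clock, assuming all streams are already timestamped on a shared clock in integer microseconds. It is not necessarily the method used in this project; interpolation is a reasonable alternative for continuous signals such as joint angles. The `align_to_reference` helper and the `max_gap` tolerance are hypothetical:

```python
import bisect
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def align_to_reference(ref_times: Sequence[int], stream_times: Sequence[int],
                       stream_values: Sequence[T], max_gap: int) -> List[Optional[T]]:
    """For each reference timestamp, return the stream value with the nearest
    timestamp (both sequences sorted, same clock and units). Returns None when
    the nearest sample is more than max_gap away, so dropouts stay visible."""
    aligned: List[Optional[T]] = []
    for t in ref_times:
        i = bisect.bisect_left(stream_times, t)  # first sample at or after t
        # The nearest sample is either the one just before t or the one at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(stream_times)]
        if not candidates:
            aligned.append(None)
            continue
        best = min(candidates, key=lambda j: abs(stream_times[j] - t))
        aligned.append(stream_values[best] if abs(stream_times[best] - t) <= max_gap else None)
    return aligned


# Scene video (~30 Hz) as the reference clock; gaze at 200 Hz (5 ms period).
video_us = [0, 33_333, 66_667, 200_000]
gaze_us = [i * 5_000 for i in range(20)]  # 0 to 95 ms
gaze_idx = list(range(20))                # stand-in for gaze samples
aligned = align_to_reference(video_us, gaze_us, gaze_idx, max_gap=2_500)
# → [0, 7, 13, None]
```

Setting `max_gap` to half the faster stream's sample period flags frames where that stream dropped out, instead of silently reusing a stale sample.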

The solution: a three-tier workflow designed to scale sensor data collection

1) Goals

We structured the solution specifically to:

- Validate the proof of concept

- Develop robust guidelines

- Optimize the collection infrastructure

- Define collection and calibration procedures

- Identify the best hardware setup for scalable production

2) Equipment

To make scale economics practical without sacrificing data quality, we used three parallel kits, each combining:

- Motion capture suit: body position and angular velocities

- Motion capture gloves: detailed hand pose and finger/hand motion

- Eye-tracking smart glasses: POV video, gaze tracking and depth sensing

- Force-sensing gloves: grip strength/contact force signals

- (Optional) 3D action camera: additional depth/scene reference in targeted sequences
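Because every kit carries the same four core devices plus one optional camera, each recording can be checked for completeness before it enters the dataset. Here is a minimal sketch, assuming each recording lists the streams it contains (the stream names, `Recording` type and `check_manifest` helper are hypothetical):

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Set, Tuple

# Hypothetical stream names for the kit components listed above.
REQUIRED_STREAMS: FrozenSet[str] = frozenset({
    "mocap_suit",            # body position and angular velocities
    "mocap_gloves",          # hand pose and finger motion
    "eye_tracking_glasses",  # POV video, gaze and depth
    "force_gloves",          # grip strength / contact force
})
OPTIONAL_STREAMS: FrozenSet[str] = frozenset({"action_camera_3d"})  # targeted sequences only


@dataclass
class Recording:
    recording_id: str
    kit_id: str
    streams: Set[str] = field(default_factory=set)


def check_manifest(rec: Recording) -> Tuple[List[str], List[str]]:
    """Return (missing required streams, unexpected streams), each sorted."""
    missing = REQUIRED_STREAMS - rec.streams
    unexpected = rec.streams - REQUIRED_STREAMS - OPTIONAL_STREAMS
    return sorted(missing), sorted(unexpected)


rec = Recording("rec-0001", "kit-2",
                {"mocap_suit", "mocap_gloves", "eye_tracking_glasses", "action_camera_3d"})
missing, unexpected = check_manifest(rec)  # the force-glove stream is absent
```

Keeping the optional camera in its own set means recordings without it still pass, while a misnamed or stray stream is reported rather than silently accepted.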

3) Monitoring

To ensure that all the work was accounted for and kept on track, we provided:

- Documentation covering calibration procedures, data collection guidelines and hardware setup

- Weekly delivery reports
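The case study doesn't describe the report format. As a minimal sketch, assuming each delivery is logged as a (date, kit, hours) record (the `weekly_report` helper and field layout are hypothetical, and the sample dates and hours are illustrative only, not project data), the hours can be grouped by ISO week and tracked against the 75-hour per-kit target:

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Tuple

TARGET_HOURS_PER_KIT = 75.0  # 225 h split evenly across three kits


def weekly_report(deliveries: Iterable[Tuple[date, str, float]]):
    """Group delivered hours by ISO week and kit, and compute each kit's
    cumulative progress toward its hour target (1.0 means target reached)."""
    by_week: Dict[Tuple[int, int], Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    cumulative: Dict[str, float] = defaultdict(float)
    for day, kit_id, hours in deliveries:
        iso_year, iso_week, _ = day.isocalendar()
        by_week[(iso_year, iso_week)][kit_id] += hours
        cumulative[kit_id] += hours
    weeks = {wk: dict(kits) for wk, kits in sorted(by_week.items())}
    progress = {kit: total / TARGET_HOURS_PER_KIT for kit, total in cumulative.items()}
    return weeks, progress


# Illustrative inputs only.
weeks, progress = weekly_report([
    (date(2024, 3, 4), "kit-1", 12.5),
    (date(2024, 3, 6), "kit-1", 7.5),
    (date(2024, 3, 5), "kit-2", 15.0),
    (date(2024, 3, 11), "kit-1", 10.0),
])
```

Grouping by ISO (year, week) keeps weeks that straddle a year boundary together, and the per-kit progress ratio shows early whether one kit is falling behind the even split.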

The results: synchronized sensor data ready for robotics AI

Over the course of the pilot, Defined.ai delivered 225 hours of finished, synchronized sensor data, split evenly across the three collection kits. This gave the client a substantial, consistent foundation to start training and evaluating robotics AI models with confidence. Instead of piecing together fragmented recordings, the client now has a coherent, multimodal dataset designed for real-world physical AI development. That makes it easier to accelerate manipulation learning for humanoid robots, strengthen robotics AI pipelines that combine perception and control, and build more reliable training signals from egocentric video, depth cues, kinematics and force channels.

Just as importantly, the dataset is structured to minimize rework, so teams can spend less time cleaning and aligning data and more time improving model performance.

Building robotics AI systems and need synchronized sensor data that’s ready to train on? Book a call with one of our data collection experts to find out how we can help, from scoping a pilot to scaling into production, or check out our data marketplace for AI-ready robotics datasets.